Transforming global finance systems at Western Union

I transformed Western Union’s legacy ETL into a real-time streaming data platform built on Kafka, Spark, and Flink. The platform supports rapid fraud detection, global AML compliance, and dynamic analytics for 1,500+ stakeholders, while maintaining high-fidelity processing of over 200 million transactions each month.

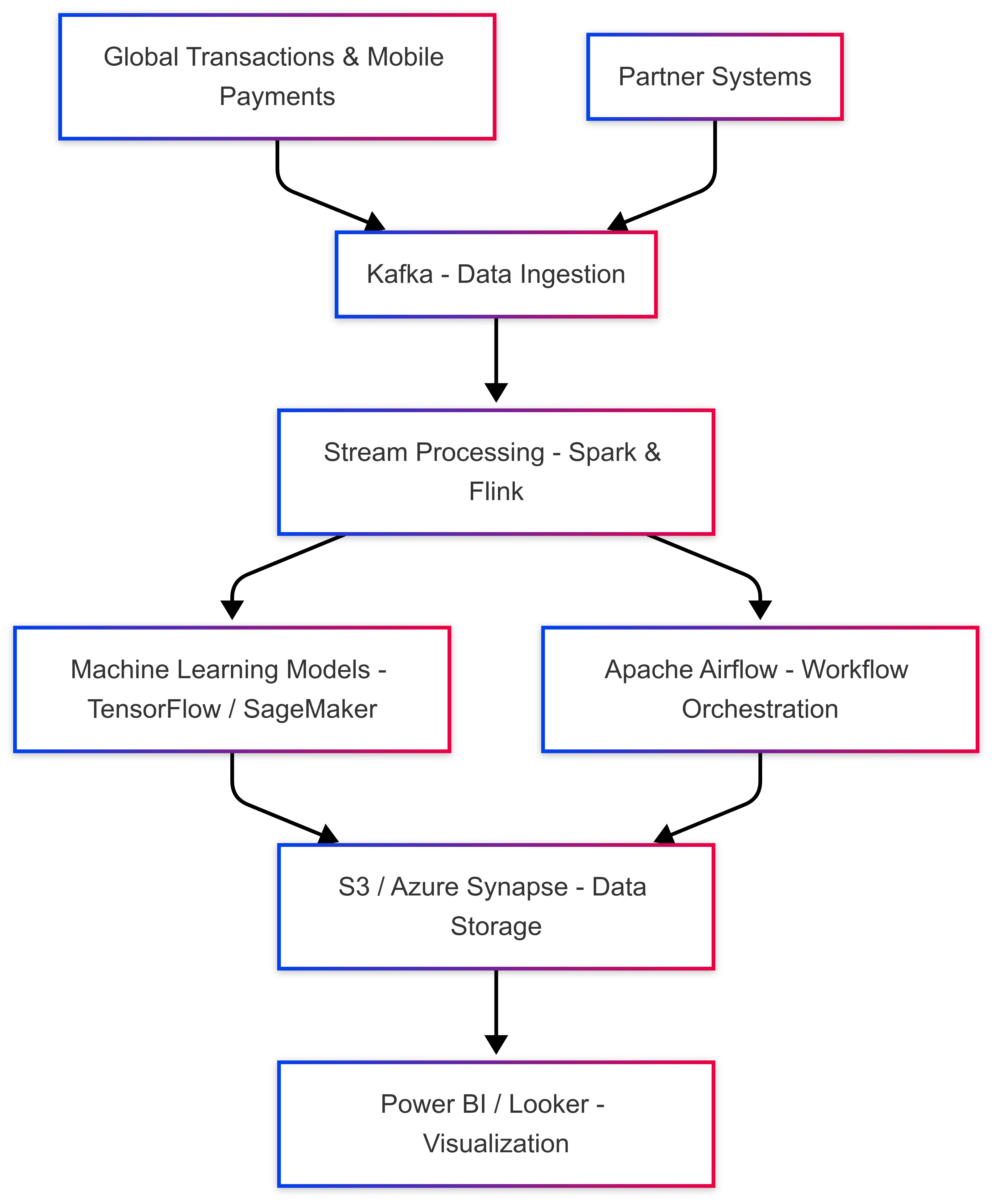

Components

Data Ingestion (Kafka):

Handles real-time ingestion of global transactions, mobile payments, and partner data, maintaining low latency and fault tolerance.
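
As a rough illustration of the ingestion step, the sketch below turns one transaction into the key and value bytes a Kafka producer would send. The `Transaction` fields and the choice of `sender_id` as the message key are assumptions made for this example, not the production schema. With Kafka's default partitioner, messages that share a key land in the same partition, which preserves per-sender ordering as long as the partition count stays fixed.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class Transaction:
    # Hypothetical event shape; the real schema is not given in this write-up.
    txn_id: str
    sender_id: str
    amount: float
    currency: str
    source: str  # e.g. "retail", "mobile", "partner"


def to_kafka_record(txn: Transaction) -> tuple[bytes, bytes]:
    """Return (key, value) bytes suitable for a Kafka producer's send call."""
    # Keying by sender routes one sender's events to a single partition.
    key = txn.sender_id.encode("utf-8")
    # sort_keys gives a stable byte representation, which helps testing.
    value = json.dumps(asdict(txn), sort_keys=True).encode("utf-8")
    return key, value


key, value = to_kafka_record(
    Transaction("t-1", "sender-42", 250.0, "USD", "mobile")
)
```

The resulting pair can be handed to whichever producer client is in use; the serialization stays independent of the client library.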

Stream Processing (Spark & Flink):

Spark performs real-time fraud pattern detection and currency conversion enrichment. Flink handles complex event correlation and temporal aggregations across regions.
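
To make the idea of a temporal rule concrete, here is a minimal plain-Python sketch of a sliding-window velocity check: a sender is flagged after exceeding a set number of transactions within a time window. It stands in for the kind of pattern a Flink job would express and is not the Flink code itself. The class name, threshold, and window length are all hypothetical.

```python
from collections import defaultdict, deque


class VelocityRule:
    """Flag a sender with more than `max_txns` events inside `window_s` seconds."""

    def __init__(self, max_txns: int, window_s: float) -> None:
        self.max_txns = max_txns
        self.window_s = window_s
        self._recent = defaultdict(deque)  # sender_id -> recent event timestamps

    def observe(self, sender_id: str, ts: float) -> bool:
        """Record one event and return True if the sender is now flagged.

        Timestamps are assumed to be non-decreasing for each sender.
        """
        q = self._recent[sender_id]
        q.append(ts)
        # Evict timestamps that have fallen out of the sliding window.
        while q and q[0] <= ts - self.window_s:
            q.popleft()
        return len(q) > self.max_txns


rule = VelocityRule(max_txns=3, window_s=60.0)
flags = [rule.observe("sender-42", t) for t in (0, 10, 20, 30, 100)]
# flags == [False, False, False, True, False]
```

A streaming engine adds what this sketch omits: keyed state that survives restarts, event-time handling for late or out-of-order records, and distribution across workers.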

Machine Learning (TensorFlow + SageMaker):

ML models classify transaction risk levels, detect anomalies, and retrain continuously on recent patterns. Models are deployed behind real-time inference endpoints.
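
One simple way to turn a model score into a risk level is to bucket it against fixed cut-offs. The sketch below assumes the model emits a score in [0, 1]; the thresholds and level names are hypothetical placeholders, and real values would be tuned against labelled fraud outcomes.

```python
from bisect import bisect_right

# Hypothetical cut-offs on a model score in [0, 1].
THRESHOLDS = (0.3, 0.7)
LEVELS = ("low", "medium", "high")


def risk_level(score: float) -> str:
    """Map a score to a risk level; a score equal to a cut-off takes the higher level."""
    if not 0.0 <= score <= 1.0:
        raise ValueError(f"score out of range: {score}")
    return LEVELS[bisect_right(THRESHOLDS, score)]


levels = [risk_level(s) for s in (0.05, 0.3, 0.69, 0.95)]
# levels == ["low", "medium", "medium", "high"]
```

Keeping the cut-offs in data rather than in branching logic makes it straightforward to adjust them per region or per product without touching the function.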

Orchestration (Apache Airflow):

Schedules daily training, stream validations, and batch reporting. Airflow DAGs coordinate with Spark and SageMaker to ensure timely updates.

Storage (S3 + Azure Synapse):

AWS S3 stores raw logs and real-time data, while Azure Synapse supports cross-border reporting and long-term trend analysis.
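
A common pattern for raw storage is a partitioned object key, so that downstream loads can pick up only the partitions they need. The sketch below builds a Hive-style key from region and event time. The prefix, partition names, and file naming are assumptions for illustration; the actual bucket layout is not described here.

```python
from datetime import datetime, timezone


def raw_object_key(region: str, txn_id: str, event_time: datetime) -> str:
    """Build a partitioned object key for one raw transaction record.

    `event_time` must be timezone-aware; partitions are computed in UTC
    so that daylight-saving shifts never split or duplicate an hour.
    """
    if event_time.tzinfo is None:
        raise ValueError("event_time must be timezone-aware")
    t = event_time.astimezone(timezone.utc)
    return (
        f"raw/transactions/region={region}/"
        f"dt={t:%Y-%m-%d}/hour={t:%H}/{txn_id}.json"
    )


object_key = raw_object_key(
    "EU", "t-1", datetime(2025, 1, 1, 13, 5, tzinfo=timezone.utc)
)
# object_key == "raw/transactions/region=EU/dt=2025-01-01/hour=13/t-1.json"
```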

Visualization (Power BI + Looker):

Dashboards for 1,500+ stakeholders across compliance, operations, and analytics teams visualize fraud scores, transaction heatmaps, and cross-border flow summaries.

Key Achievements

- Migrated legacy ETL into a streaming-first architecture, reducing data latency by 85%.

- Enabled sub-minute fraud detection across all regions using Flink CEP and real-time ML scoring.

- Automated AML compliance checks and country-specific regulatory reporting.

- Supported 200M+ transactions/month with <5s end-to-end visibility in dashboards.

- Delivered scalable analytics to 1,500+ global users via Power BI and Looker.
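
A latency target such as the under-5-second dashboard visibility above is usually checked against a high percentile rather than an average. The sketch below is one way to do that, using a nearest-rank percentile over measured end-to-end latencies. The choice of the 99th percentile and the sample values are assumptions for illustration, not measurements from this project.

```python
import math


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile for pct in (0, 100]."""
    if not values:
        raise ValueError("no samples")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(values)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def meets_slo(latencies_s: list[float], slo_s: float = 5.0, pct: float = 99.0) -> bool:
    """True if the chosen percentile of end-to-end latency is under the target."""
    return percentile(latencies_s, pct) < slo_s


# Made-up samples: seconds from event time to dashboard visibility.
ok = meets_slo([0.8, 1.2, 2.5, 4.1])
breached = meets_slo([0.8, 1.2, 6.0])
```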

Airflow DAG Example

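```python
from datetime import datetime, timedelta

from airflow import DAG
from airflow.providers.amazon.aws.operators.sagemaker import SageMakerTrainingOperator
# Note: apache-airflow-providers-apache-flink ships FlinkKubernetesOperator
# (operators.flink_kubernetes), not FlinkOperator. The import and arguments
# below assume an in-house operator; adjust them to your environment.
from airflow.providers.apache.flink.operators.flink import FlinkOperator

# Defaults applied to every task in the DAG.
default_args = {
    'owner': 'global_data_team',
    'start_date': datetime(2025, 1, 1),
    'retries': 2,
    'retry_delay': timedelta(minutes=10),
}

with DAG(
    'western_union_pipeline',
    default_args=default_args,
    schedule_interval='@hourly',  # deprecated in favor of `schedule` since Airflow 2.4
    catchup=False,  # do not backfill runs between start_date and now
) as dag:

    # Submit the Flink fraud-detection job.
    detect_fraud = FlinkOperator(
        task_id='flink_fraud_detection',
        job_name='fraud-detection',
        flink_app_jar='/opt/flink/jobs/fraud-detection.jar',
        conf_file='/opt/flink/conf/application.conf',
    )

    # Retrain the risk model on SageMaker.
    train_model = SageMakerTrainingOperator(
        task_id='train_risk_model',
        config={...},  # SageMaker config omitted for brevity
        aws_conn_id='aws_default',
    )

    # Training runs only after the fraud-detection task succeeds.
    detect_fraud >> train_model
```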

Summary

We transformed Western Union’s global finance pipelines to enable real-time analytics and machine learning across critical financial workflows.